One in four social media accounts posting about the ongoing conflict between Hamas and Israel is fake.

This is the claim made by Cyabra, an Israeli social media threat intelligence company – and the vast amount of data scraped, compiled, and analyzed by the company’s platform backs it up.

The company uses semi-supervised machine learning and AI algorithms in order to comprehensively observe, detect and flag fake social media accounts throughout the internet. Last year, Cyabra made headlines when it was hired by Elon Musk to determine the number of bots on Twitter, finding that 11% of all accounts on the popular online platform were fake.

Following Hamas’ terror attack, which slaughtered over 1,200 civilians – children, babies, women, and men alike – Cyabra has been monitoring the online conversation surrounding the conflict between Hamas and Israel. What it has found is that the terrorist organization is deploying bots on a massive scale – over 40,000 – in order to spread false narratives and sway public opinion.

“Everyone’s been quite surprised by the ground invasion on Saturday and the organization that went into it. We can also say that there is a second battle that’s taking place in the online sphere, with a huge number of fake accounts and a level of coordination that goes beyond that of a terrorist group – it’s more akin to a state level of organization,” said Rafi Mendelsohn, Cyabra’s VP Marketing.

“The level of sophistication of organization, preparation and planning that goes into something like this is massive,” he said. “Some of the fake accounts that we’ve seen were created a year and a half ago, and were just kind of lying in wait. And then in the first two days of the conflict, they had posted over 600 times.”

Social media serves as a major source of information for millions of experts, journalists, and individual citizens, whether they’re based in Israel or elsewhere. If 25% of the accounts engaging in the conversation are fake, as Cyabra’s evidence shows, that is an enormous hurdle on the way to finding accurate information.

“We scanned over 160,000 profiles that were participating in the conversation, based on a comprehensive list of terms and hashtags, and we could see that over 40,000 fake profiles were really trying to dominate the conversation. They were spreading over 312,000 posts and comments in the first two days,” elaborated Mendelsohn. “The content that was created, that was being pushed out by fake accounts had the potential of reaching over 500 million profiles in just those two days.”
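The figures Mendelsohn cites can be cross-checked with simple arithmetic. The short sketch below uses only numbers quoted in this article; because each was given as "over" a round figure, the results are approximations rather than exact measurements, and the variable names are purely illustrative.

```python
# Figures quoted in the article (each was stated as "over" this amount).
profiles_scanned = 160_000
fake_profiles = 40_000
fake_posts = 312_000

# Share of scanned profiles flagged as fake: the "one in four" claim.
fake_share = fake_profiles / profiles_scanned

# Average number of posts and comments per fake profile in the first two days.
posts_per_fake = fake_posts / fake_profiles

# The single account cited as posting 600 times in the first 48 hours.
single_account_posts = 600
window_hours = 48
posts_per_hour = single_account_posts / window_hours
minutes_between_posts = 60 / posts_per_hour

print(f"Fake share: {fake_share:.0%}")
print(f"Posts per fake profile: {posts_per_fake:.1f}")
print(f"Busiest cited account: {posts_per_hour:.1f} posts/hour, "
      f"one every {minutes_between_posts:.1f} minutes")
```

On these numbers, the typical fake profile posted fewer than ten times in two days, while the single account Mendelsohn describes posted roughly once every five minutes around the clock.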

Identifying a fake account

Mendelsohn highlighted a critical point about Cyabra’s process: “We’re not checking for facts,” he said. “There are plenty of services and companies doing that, and even the platforms themselves are double checking for factual inaccuracies in the content being posted.”

Instead, the company observes, analyzes, and evaluates the behaviors of thousands of accounts in order to determine whether a user is, in fact, fake.

One of the simplest signs to spot is how often an account posts.

“The nature of a fake account, generally speaking, is that they are much more active on social media than the average human person because they’re trying to spread a narrative,” explained Mendelsohn, noting his earlier example of a single bot which had published 600 posts within the first 48 hours of the conflict.

That said, inhuman posting frequency is only one of the 600 to 800 behavioral parameters that Cyabra evaluates when determining whether an account is authentic or inauthentic. These include the times of day an account posts, how regularly it posts, how it was created, what kinds of accounts and content it engages with, what kinds of accounts and content engage with it, what its profile looks like, whether it has a profile picture, and hundreds of other minute details.

“We [humans] are able to process some of them manually as we engage in social media, and some of them are obvious, right? It’s typically dumb bots that are trying to sell us crypto,” Mendelsohn said. “But then other ones are more difficult to spot, and that’s what our technology does, it takes the behaviors of a profile, and then raises a series of red and green flags as we go through all of those different behavioral parameters.”
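To make the idea of red and green flags concrete, here is a minimal sketch of a rule-based flagger. It is not Cyabra's method: the company says it uses semi-supervised machine learning over hundreds of parameters, whereas this toy example checks only three signals mentioned in the article. The `Profile` fields, the thresholds, and the `min_red` cutoff are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    """Toy profile; every field name here is a hypothetical stand-in."""
    posts_last_48h: int        # posts and comments in the last two days
    prior_posts: int           # posts made before that two-day window
    has_profile_picture: bool
    active_hours_per_day: int  # distinct hours of the day with a post (0-24)


def raise_flags(p: Profile) -> tuple[list[str], list[str]]:
    """Return (red_flags, green_flags) for a profile.

    All thresholds are made-up illustrations, not real detection rules.
    """
    red: list[str] = []
    green: list[str] = []

    if p.posts_last_48h > 200:
        red.append("inhuman posting frequency")
    else:
        green.append("plausible posting frequency")

    if p.prior_posts < 10 and p.posts_last_48h > 200:
        red.append("dormant account suddenly active")

    if p.has_profile_picture:
        green.append("has profile picture")
    else:
        red.append("no profile picture")

    if p.active_hours_per_day >= 22:
        red.append("posts around the clock")
    else:
        green.append("has quiet hours")

    return red, green


def looks_inauthentic(p: Profile, min_red: int = 2) -> bool:
    """Call a profile suspicious once it collects at least min_red red flags."""
    red, _ = raise_flags(p)
    return len(red) >= min_red


suspect = Profile(posts_last_48h=600, prior_posts=3,
                  has_profile_picture=False, active_hours_per_day=24)
ordinary = Profile(posts_last_48h=5, prior_posts=900,
                   has_profile_picture=True, active_hours_per_day=10)

print(looks_inauthentic(suspect))
print(looks_inauthentic(ordinary))
```

A real system would weigh far more signals and learn its thresholds from data rather than hard-coding them, which is why no single flag, such as a missing profile picture, is treated as decisive here.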

What narratives are Hamas bots pushing?

Once a Hamas bot has been detected, one simply has to look through the posts it makes in order to understand what it’s trying to accomplish. In so doing, Cyabra identified three primary narratives being pushed by Hamas:

Hamas’ kidnappings are justified

The first narrative appears mostly in Arabic, which suggests it is aimed at Arab audiences, and promotes the idea of the imminent release of prisoners from Israeli jails.

The posts claim that, given the advantageous trades Hamas made in the past for a single Israeli captive, it will be able to free many more Palestinian prisoners now that it has taken dozens.

“It’s aimed at the Arabic audiences to be able to say, hey, look, the ends justify the means. Think about how many prisoners we’re going to have released by the sheer number of hostages that we are taking,” Mendelsohn said.

Hamas is being civil and humane

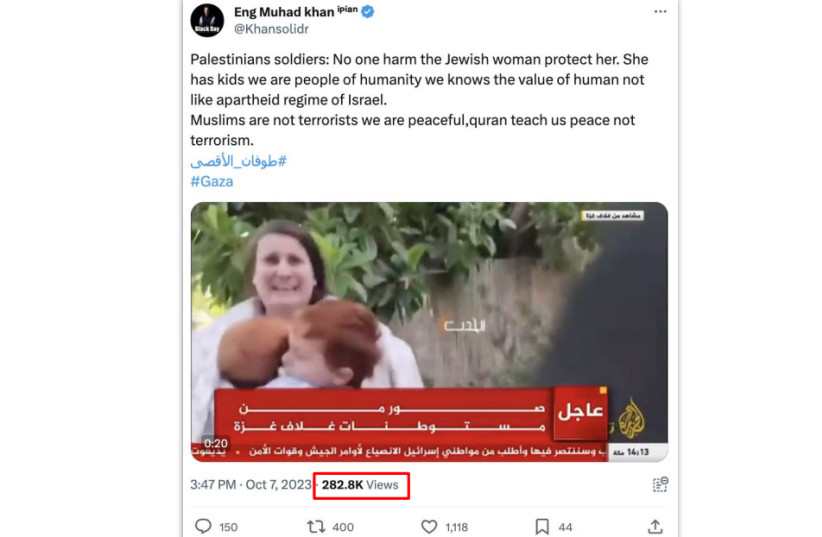

The second narrative, mostly in English and therefore targeting international audiences, focuses on praising the humanity and compassion of Hamas as it took hostages. To accomplish this, Hamas cherry-picks key portions of videos shot during Saturday’s deadly attack to fabricate an image of civility.

“They’re taking only a part of the videos – the part where they are actually not showing aggression or violence towards a hostage,” Mendelsohn noted.

There have been reports of Hamas having stabbed children to death on Saturday morning – likely in front of their parents, based on the proximity and position of the bodies found in the aftermath of the attack.

Hamas had no choice following the IDF’s raid on Al-Aqsa in April

The third narrative uses the IDF’s raid on Al-Aqsa Mosque earlier this year, when Israeli forces forcibly entered the mosque and detained 350 people, as justification for the attack on Israeli civilians.

“The idea here is: ‘what other choice did we possibly have?’” Mendelsohn said.

He went on to note a key observation. “One thing to point out is that all of this involves a lot of coordination. The operation requires [significant] resources to be able to monitor what’s being said, to decide which clips they’re going to take, to decide which parts of the clips they’re going to take, then to come up with this kind of narrative, and then distribute it amongst the fake accounts that they’ve created.”

Staying vigilant

Faced with such daunting numbers, how can individuals stay safe and vigilant about the authenticity of the information they’re absorbing?

“As citizens, we should be very cautious about what we’re seeing. We should be very particular about what we’re engaging with, and particularly around moments like this, we should take the extra time to check what we’re seeing: who we’re engaging with, what’s the source, does this sound believable, or does it sound suspicious?” Mendelsohn said.

“That’s being made particularly challenging because of just the sheer amount of images and videos that are coming out that are so distressing and emotive,” he added. “If you’re drawn into that, it’s possible that your senses are disabled in terms of rationality – but it’s incredibly important that we’re questioning these things during times like this.”