X, formerly Twitter, has reportedly become a hotbed of hate, according to the results of an experiment conducted over the last two weeks by the Center for Countering Digital Hate (CCDH).

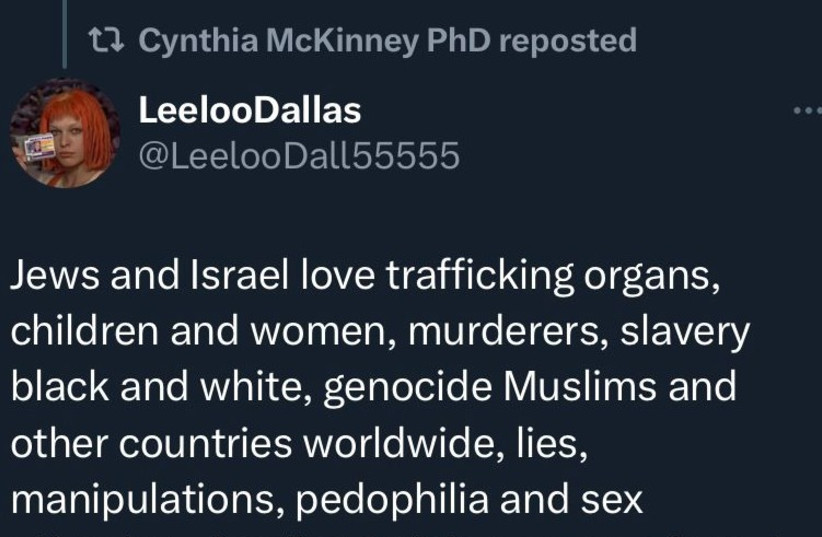

The CCDH reported 200 posts that it classified as antisemitic, Islamophobic, or anti-Palestinian. Of those 200 posts, 196 were allowed to remain on the site.

The CCDH confirmed that all 200 posts, from 101 different X users, breached X’s platform rules on hateful content.

Hateful content online and hate crimes offline have increased since the start of the Israel-Hamas war, the CCDH claimed. All of the reported content was connected in some way to the conflict.

Examples of hateful content

One of the posts, for which X has limited interaction, citing “Violent Speech”, shared a meme featuring offensive depictions of a Jewish person and an Arab person alongside a white person. Both the Arab and the Jew ask the white person to “pick a side”, to which the white person responds “I hope you kill each other.”

Of the reported posts that were sent to the Jerusalem Post, only two did not have their visibility limited in any way. The first post, from white supremacist Jackson Hinkle, showed footage of the attempted Dagestan airport pogrom. The second claimed that “Palestine as a region is cited in the Bible but the Palestinians don't exist as a people.”

Only one of the 101 accounts sharing the prohibited content was suspended, and a further two were “locked.”

Imran Ahmed, CEO and founder of the Center for Countering Digital Hate (CCDH), said: “After an unprecedented terrorist atrocity against Jews in Israel, and the subsequent armed conflict between Israel and Hamas, hate actors have leaped at the chance to hijack social media platforms to broadcast their bigotry and mobilize real-world violence against Jews and Muslims, heaping even more pain into the world.

“X has sought to reassure advertisers and the public that they have a handle on hate speech – but our research indicates that these are nothing but empty words.

“Our ‘mystery shopper’ test of X’s content moderation systems – to see whether they have the capacity or will to take down 200 instances of clear, unambiguous hate speech – reveals that hate actors appear to have free rein to post viciously antisemitic and hateful rhetoric on Elon Musk’s platform.

“This is the inevitable result when you slash safety and moderation staff, put the Bat Signal up to welcome back previously banned hate actors, and offer increased visibility to anyone willing to pay $8 a month. Musk has created a safe space for racists, and has sought to make a virtue of the impunity that leads them to attack, harass and threaten marginalized communities.”