New research at the Hebrew University of Jerusalem is looking to understand the computing power of a neuron in order to improve our understanding of deep learning networks.

Deep learning networks are based on the same principles that form the structure of our brain, and are composed of artificial nerve cells connected via artificial synapses.

Present-day artificial neurons generate one of two output states, "0" for off and "1" for on, a design based on an understanding of neural function that dates back to the 1950s.

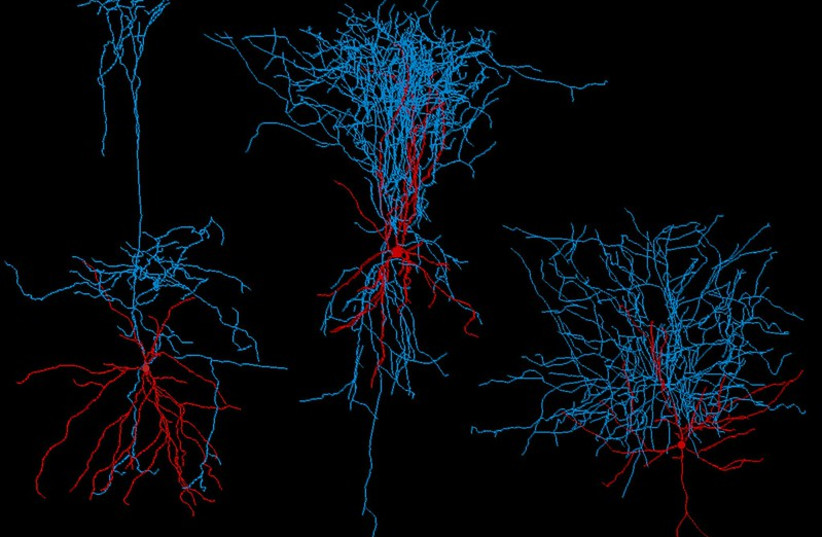

However, the field of neuroscience has evolved in recent decades and has discovered that individual neurons are built from complex branching systems that contain many functioning sub-regions. The branching structure of neurons and the many synapses imply that single neurons might behave as an extensive network.

Ph.D. student David Beniaguev, along with Profs. Michael London and Idan Segev at HU’s Edmond and Lily Safra Center for Brain Science (ELSC), have published their findings in the peer-reviewed journal Neuron.

Their objective is to understand how individual nerve cells translate synaptic inputs to their electrical output. They hope to create a new kind of deep-learning artificial infrastructure that will act more like a human brain.

“The new deep learning network that we propose is built from artificial neurons whereby each of them is already 5-7 layers deep. These units are connected, via artificial synapses, to the layers above and below them,” Segev explained.
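To make the idea of a "neuron that is itself several layers deep" concrete, here is a minimal sketch in Python. It is only an illustration of the concept in the quote, not the architecture from the published study: the class name `DeepUnit`, the layer width, the `tanh` activation and the random, untrained weights are all assumptions chosen for brevity.

```python
import math
import random
from typing import List, Sequence


def dense(inputs: Sequence[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """One fully connected layer with a tanh activation."""
    return [
        math.tanh(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


class DeepUnit:
    """Hypothetical 'neuron' that is internally a small multi-layer network.

    The weights are random and untrained; a real model would be fitted to data.
    """

    def __init__(self, n_inputs: int, depth: int = 5, width: int = 4, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Layer sizes: synaptic inputs -> (depth - 1) hidden layers -> one output.
        sizes = [n_inputs] + [width] * (depth - 1) + [1]
        self.layers = []
        for n_in, n_out in zip(sizes, sizes[1:]):
            weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
            biases = [0.0] * n_out
            self.layers.append((weights, biases))

    def forward(self, synaptic_inputs: Sequence[float]) -> float:
        """Pass the synaptic inputs through every internal layer in turn."""
        activations = list(synaptic_inputs)
        for weights, biases in self.layers:
            activations = dense(activations, weights, biases)
        return activations[0]


unit = DeepUnit(n_inputs=3, depth=5)
output = unit.forward([1.0, 0.0, 1.0])
print(len(unit.layers), output)
```

In a larger network, many such units would be wired together, with the output of each one serving as a synaptic input to units in the next layer.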

Significant research is focused on giving artificial deep learning more intelligent and all-encompassing abilities. So far, deep learning has not been able to process and correlate different stimuli.

Currently, in deep neural networks (DNNs), every artificial neuron responds to its synaptic inputs with a "0" or a "1," based on the synaptic strength it receives from the previous layer. That strength determines whether a signal is sent on to, or withheld from, the neurons in the next layer.
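The on/off behavior described here can be illustrated with a simple threshold unit. The sketch below is a simplified illustration, not code from the study: the function names, weights and threshold value are hypothetical and chosen only to show how synaptic strength decides whether a "1" or a "0" is passed on.

```python
from typing import List, Sequence


def threshold_neuron(inputs: Sequence[int], weights: Sequence[float], threshold: float) -> int:
    """Return 1 if the weighted sum of the inputs reaches the threshold, else 0."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def layer(inputs: Sequence[int], weight_rows: List[List[float]], threshold: float) -> List[int]:
    """Apply one threshold neuron per row of synaptic weights."""
    return [threshold_neuron(inputs, row, threshold) for row in weight_rows]


# Outputs of the previous layer: each neuron is either on (1) or off (0).
previous_layer = [1, 0, 1]

# Hypothetical synaptic strengths: one row per neuron in the next layer.
weights = [
    [0.6, 0.9, 0.5],  # active inputs sum to 1.1, so this neuron fires
    [0.2, 0.9, 0.1],  # active inputs sum to about 0.3, so this neuron stays silent
]

next_layer = layer(previous_layer, weights, threshold=1.0)
print(next_layer)  # [1, 0]
```

Stronger synapses on active inputs push the sum over the threshold, so the neuron sends a "1" to the next layer; weaker ones leave it at "0".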

This allows the system to recognize specific stimuli, but separate, dedicated systems need to be put in place to recognize the connections between them.

“Our approach is to use deep learning capabilities to create a computerized model that best replicates the I/O properties of individual neurons in the brain,” Beniaguev explained.

“The end goal would be to create a computerized replica that mimics the functionality, ability and diversity of the brain,” Segev said, “to create, in every way, true artificial intelligence.”